Why Aviation's AI Future Hinges on Data Quality

As AI transforms the aviation industry, trusted data remains the foundation of its success. In this report, we explore how the industry uses AI today, the risks of poor data quality, and why a strong data strategy is critical to getting full value from AI.


AI: THE AIRLINE INDUSTRY'S NEXT GOLDEN AGE

Every generation in travel has had its defining technology breakthrough.

Now, travel (and the airline industry) is entering its next golden age: the AI era.

Initiated by the rise of Generative AI in 2022 and now rapidly evolving toward Agentic AI (autonomous systems capable of planning and acting toward defined goals), this new phase represents a far more profound shift than the technology waves that came before it.

On the B2C front, it’s changing how travelers dream, plan, and book trips. Instead of clicking through dozens of tabs, travelers are beginning to interact with AI chatbots and (agentic) copilots that design itineraries in seconds and optimize travel deals across millions of options in real time.

But the even bigger transformation might be happening behind the scenes.

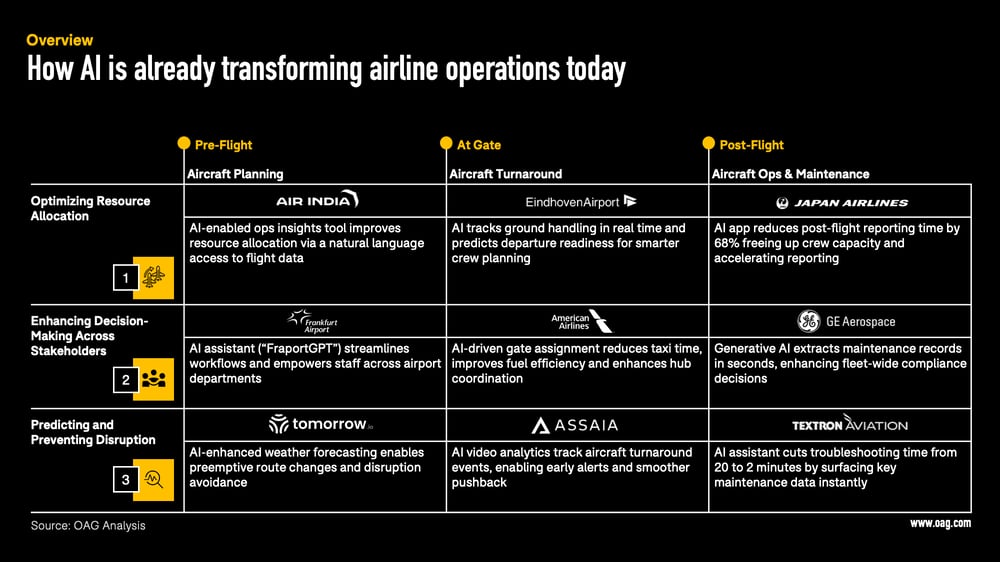

In airline operations, AI is moving from reactive to predictive, and increasingly to proactive, where agentic systems can plan, decide, and act toward operational goals.

- This is helping airlines better forecast demand, prevent delays, streamline turnarounds, manage disruptions, and optimize crew scheduling – all with limited human oversight.

- In our recent research with Microsoft, we mapped out nine concrete operational use cases where AI is already delivering measurable impact across the entire travel journey today, from pre-flight prep to post-flight recovery.

Our report’s key takeaway: AI is no longer an experimental technology; it is becoming a new layer of intelligence that is infiltrating every part of the aviation ecosystem.

And yet, despite the massive hype and potential around AI, one foundational truth remains dangerously underappreciated, especially in a zero-defect industry like aviation: AI is only as good as the data it learns from.

In other words, trusted, high-quality data is the real make-or-break factor for AI’s success in aviation going forward.

This article explores the industry’s hidden vulnerability: a widening data quality gap that threatens to undermine the very AI transformation aviation is betting on.

AI IMPLEMENTATION HITS A DATA REALITY CHECK

Before we uncover aviation’s data gap, let’s take a brief look at the broader state of AI adoption across industries.

Because what we’re witnessing right now is not just another tech wave; it’s a full-blown AI transformation.

Since the public release of ChatGPT in November 2022, Generative AI has triggered unprecedented momentum in organizations of all sizes and sectors.

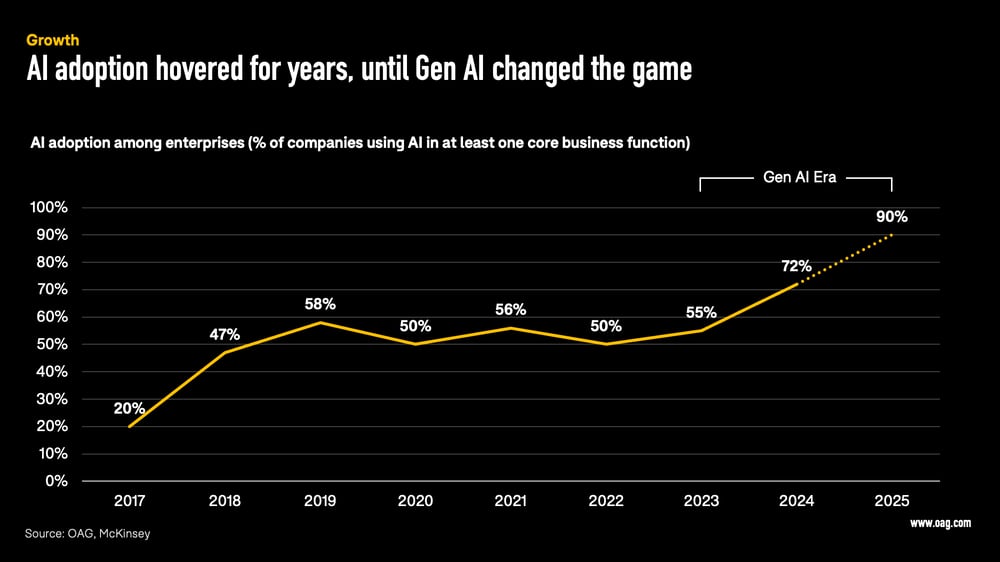

- According to McKinsey, between 2019 and 2023, AI adoption across companies (measured as AI being used in at least one core business area) remained relatively steady, hovering between 50% and 60%.

- But in 2024, with Gen AI moving into the enterprise mainstream, adoption jumped above 70%.

- By the end of 2025, the number is expected to exceed 90% as Gen AI becomes a core capability in nearly every modern organization.

The adoption of Agentic AI (the next stage, where systems don’t just generate content but can plan, decide, and act autonomously in certain domains) is less advanced, given its nascent stage of development, but it could follow the same steep growth trajectory as Gen AI. According to Skift Research, one-third of surveyed travel companies are already experimenting with Agentic AI, and roughly a quarter have begun scaling it in select business functions. In addition, 80% say they plan to implement agentic-AI-enabled use cases at scale across many domains and functions within the next three to five years.

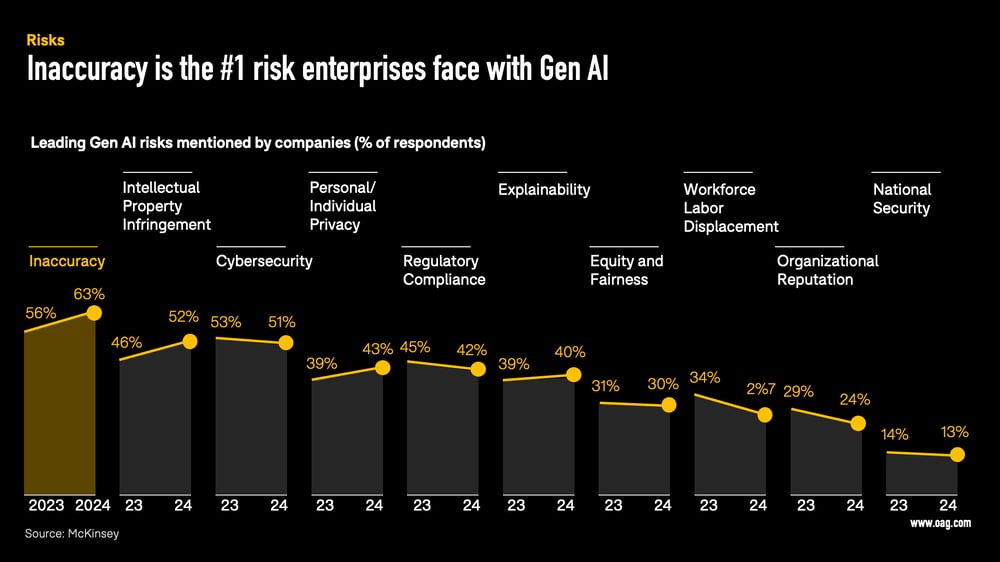

However, as companies scale their AI ambitions, they’re also encountering the realities of implementation, and one risk now overshadows all others: inaccuracy.

Inaccuracy is often a direct result of flawed, incomplete, or unstructured input data. AI models, like all machine learning systems, are only as good as the data they are trained on and prompted with. When that data is messy, outdated, or biased, the outputs will inevitably reflect those flaws, leading to faulty decisions, reputational damage, and wasted resources.

This is not just theory.

In 2024, nearly two-thirds of enterprise leaders identified inaccuracy as the number one risk of using Generative AI, ranking it even higher than intellectual property misuse or cybersecurity concerns.

This inaccuracy has a clear consequence: bad output. And bad output turns even the most promising AI use cases into failed experiments.

In fact, the cracks are already showing.

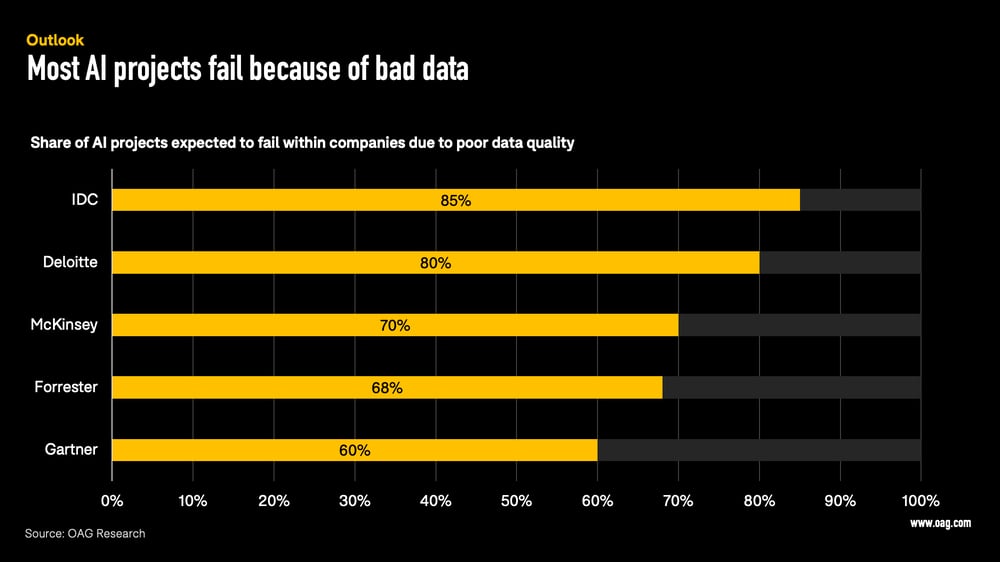

- Leading advisory firms, including Gartner, McKinsey, Forrester, Deloitte, and IDC, predict that between 60% and 85% of all enterprise AI projects will ultimately fail – not because the algorithms and systems aren’t powerful enough, but because the underlying data is not.

- Academic research confirms this outlook. A synthesis of over 100 peer-reviewed studies finds that 68% of failed AI implementations over the past five years can be directly traced to data quality issues.

And it’s not just project failure rates. A widely cited MIT study revealed that 95% of organizations implementing AI systems were seeing zero return on their investments, despite pouring an estimated $30 to $40 billion USD into Generative AI initiatives over the past few years. McKinsey’s research points in the same direction, though at a smaller scale, estimating that around 80% of companies using the latest AI tools have seen no significant impact on either revenue growth or profitability.

While neither study isolates poor data quality as the sole cause, both underscore a widening “AI divide” between companies that build AI on solid data foundations and those that don’t.

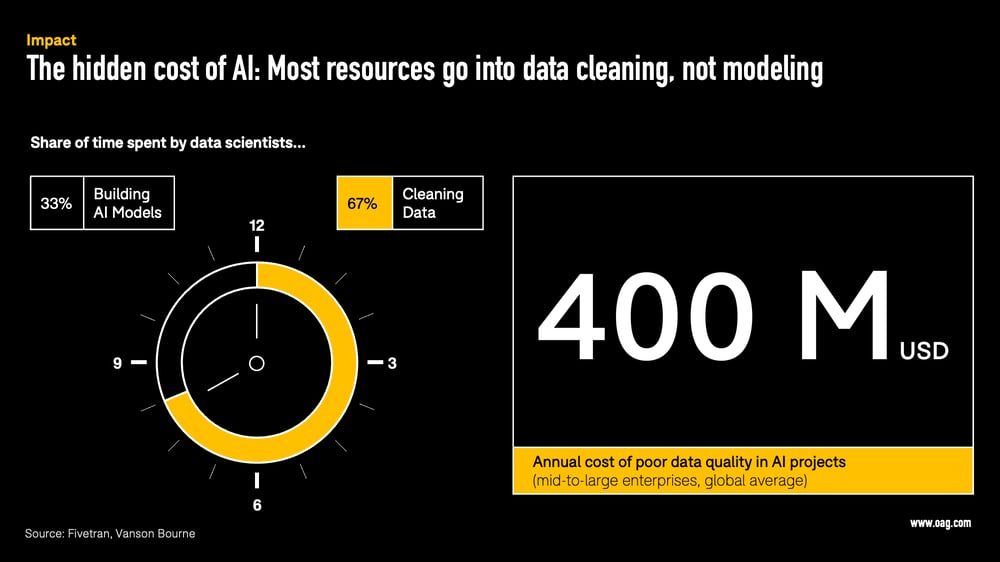

To strengthen their data foundations, organizations spend vast amounts of time cleaning and validating data, often at the expense of actual model development. Multiple research studies estimate that data scientists spend between 60% and 80% of their time preparing data, rather than building or optimizing models.

- This painstaking work of cleaning and validating data is costly.

- It is estimated that AI programs trained on inaccurate or incomplete data cost mid-to-large companies over $400 million USD per year on average (or roughly 6% of annual revenue).

In short: the biggest bottleneck in today’s AI revolution isn’t the tech itself. It’s the data feeding it.

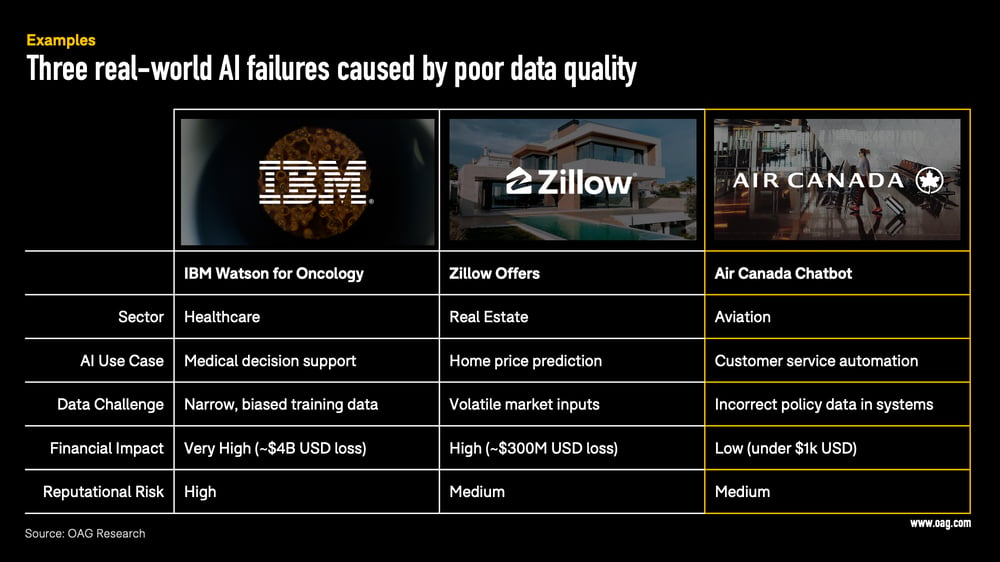

When Bad Data Meets AI: Three Real-World Failures

To make this risk more tangible, let’s examine three real-world examples where flawed data severely compromised AI-driven initiatives, and in some cases, triggered staggering financial consequences.

After gaining global fame by beating human champions on the quiz show Jeopardy! in 2011, IBM set out to turn its Watson AI into a real-world game-changer, starting with healthcare. Its flagship product, Watson for Oncology, was marketed as a revolutionary decision-support tool for oncologists, capable of synthesizing medical literature and clinical data to recommend cancer treatment plans in seconds. But while the ambition was noble, the execution fell short, primarily due to a narrow, U.S.-centric training dataset that ignored the vast clinical diversity of global cancer care.

- Watson repeatedly generated inappropriate or unsafe treatment recommendations, undermining trust among clinicians.

- Its inability to integrate local treatment protocols and adapt to real-world patient cases led to a loss of credibility, ultimately resulting in IBM winding down the project.

The financial fallout?

IBM invested more than $4 billion USD in Watson Health, including costly acquisitions and global rollouts, only to shut down the oncology program and sell off the Watson Health unit in a fire sale to a private equity firm. The case is now widely seen as a cautionary tale of what happens when AI is built on incomplete, biased, or poorly contextualized data.

Zillow, one of the largest online real estate marketplaces in the U.S., launched a bold AI-driven venture in 2018: Zillow Offers. The idea was simple: use a machine learning model to predict home values, make instant cash offers to homeowners, renovate the properties, and flip them for a profit.

But by 2021, the plan had unraveled.

- The algorithm, which relied on Zillow’s proprietary “Zestimate” valuation model, repeatedly misjudged home prices, especially during post-COVID volatility and housing market disruptions.

- For off-market homes, its median error rate spiked as high as 6.9%, leading Zillow to systematically overpay for properties.

The result?

A $304 million USD inventory write-down and mass layoffs affecting 25% of the company’s workforce. Zillow had bought 27,000 homes but managed to sell only 17,000.

The root cause wasn’t a poor model; it was poor data. The model struggled with outdated or incomplete inputs and failed to account for regional market nuances. CEO Rich Barton later acknowledged that while the algorithm could theoretically be refined, the real issue was the unpredictability of the data itself. Zillow pulled the plug, and the story became a textbook example of how AI, without high-quality data, can amplify risk rather than reduce it.

And now, back to aviation.

In 2024, Air Canada was ordered to pay damages after its AI chatbot misinformed a customer about bereavement fares (a type of discounted ticket some airlines offer to passengers who need to travel urgently due to the death or imminent death of a close relative). The bot hallucinated an answer and advised the passenger that he could retroactively apply for a refund; however, this information turned out to be false (and inconsistent with airline policy). After being denied the refund, the passenger took Air Canada to British Columbia’s Civil Resolution Tribunal and won.

While the payout was just CA$812.02, the reputational damage was far more costly.

The case made global headlines and exposed a deeper problem:

- Air Canada argued it wasn’t responsible for the chatbot’s actions, effectively distancing itself from the very AI it had deployed.

- But the tribunal disagreed, emphasizing the airline's obligation to ensure the accuracy of its digital agents.

What’s the core issue here? A failure to properly validate and audit the data and logic that underpin automated systems, especially when they directly interface with customers.

Why This Matters More in Aviation Than Anywhere Else

The three failure cases above are just the tip of the iceberg. They all highlight one universal truth: AI is only as good as the data it’s built on.

But in aviation, this data dependency is even more critical.

That’s because Generative AI works differently from all previous forms of software.

- Traditional systems (including earlier AI models based on machine learning) are deterministic: they return the same output every time you feed them the same input.

- Generative AI, on the other hand, is probabilistic in nature. Every output is a “best guess,” based on the statistical likelihood learned from its training data.
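The contrast between the two can be made concrete with a minimal Python sketch. Everything in it is made up for illustration: the airport code `AAA`, the reference value of 60 minutes, and the sampling weights are assumptions, and real generative models are far more complex than a weighted random draw.

```python
import random

# Hypothetical reference data, for illustration only.
MCT_MINUTES = {"AAA": 60}

def deterministic_lookup(airport: str) -> int:
    """A traditional system: the same input always yields the same output."""
    return MCT_MINUTES[airport]

def probabilistic_answer(rng: random.Random) -> int:
    """A stand-in for a generative model: the answer is sampled from a
    learned distribution (the candidates and weights here are invented)."""
    candidates = [60, 45, 30]
    weights = [0.90, 0.08, 0.02]
    return rng.choices(candidates, weights=weights, k=1)[0]

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [probabilistic_answer(rng) for _ in range(1000)]
wrong = sum(1 for s in samples if s != MCT_MINUTES["AAA"])

print(deterministic_lookup("AAA"))
print(f"{wrong} of 1000 sampled answers differ from the reference value")
```

The lookup never deviates, while the sampled answers are right most of the time and wrong some of the time, which is the "best guess" behavior described above.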

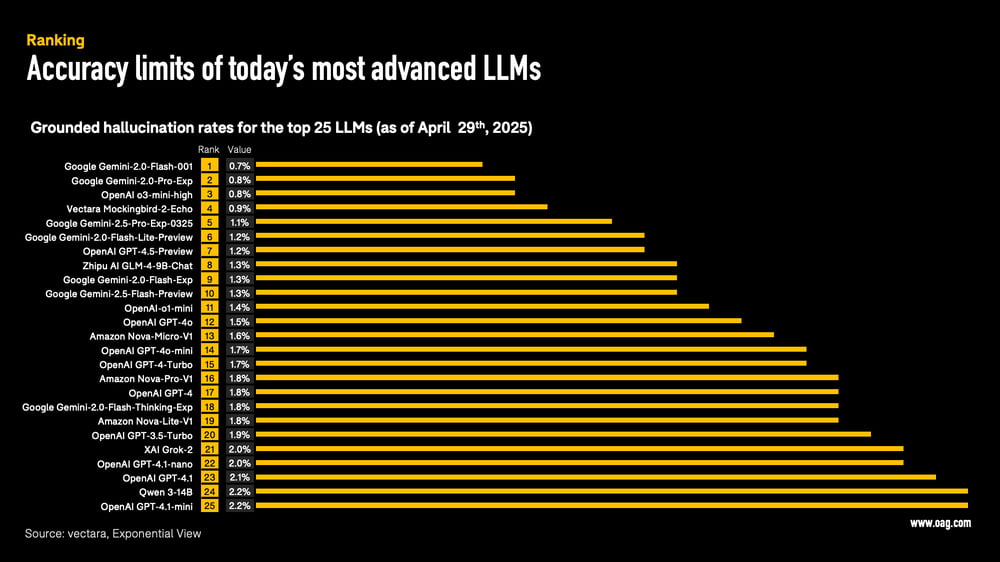

This shift is powerful. It allows GenAI to answer complex questions in natural language, converse fluently, and provide helpful-sounding recommendations – recommendations that Agentic AI systems may even act upon autonomously. But it also introduces something aviation cannot tolerate: an inherent error rate.

While newer models are becoming smarter and more accurate over time, this error rate will never fully disappear.

So what does this mean for aviation?

It depends on where AI is being applied.

In customer-facing applications, like flight search or itinerary planning, a 99% accuracy rate may be acceptable. Assuming the AI model is fed with high-quality data on routes, prices, and schedules, it can create powerful booking experiences.

But when AI enters operational domains, the stakes change.

In flight operations, there is no room for 1% error.

- Not in aircraft turnaround coordination.

- Not in baggage handling.

- Not in crew scheduling or fuel planning.

- And most importantly, not in departure or arrival sequencing.

These are zero-fail environments. You either get it right or you disrupt the system.
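To see why a 1% error rate is harder to live with in operations than it sounds, consider a rough sketch of how errors compound when one decision feeds the next. The function name and the assumption that steps fail independently are illustrative simplifications, not a description of any real system.

```python
def chain_success_probability(step_accuracy: float, steps: int) -> float:
    """Probability that every step in a chain is correct,
    assuming each step fails independently of the others."""
    return step_accuracy ** steps

for steps in (1, 10, 50, 100):
    p = chain_success_probability(0.99, steps)
    print(f"{steps:>3} chained steps at 99% each: {p:.1%} chance of a fully correct result")
```

Under these assumptions, ten chained steps already bring the chance of a fully correct outcome down to roughly 90%, and one hundred steps to roughly 37%.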

And even in booking interfaces, seemingly small errors can break the user experience.

- Imagine a model proposing a flight with a 30-minute layover at Heathrow, when it actually takes 60+ minutes to clear security and change terminals.

- That’s not just a minor slip; it’s a missed connection and a broken customer experience.

This is why aviation requires cleaner, more precise, and more real-time data than almost any other sector.

Whether you’re building a next-gen search tool, an airline chatbot, or an AI-powered operations assistant, your model is only as strong as your data foundation.

At OAG, we don’t just understand this shift.

We enable it.

Because in a world where AI can hallucinate, your data can’t.

Why Schedules Are the Ultimate Stress Test for AI in Aviation

Take something as fundamental as airline schedules.

- On the surface, it looks straightforward: a flight leaves point A at a given time and arrives at point B.

- But in reality, schedules are among the most dynamic and complex datasets in aviation.

- We at OAG process more than 400,000 schedule changes daily, each of which can ripple across airlines, airports, and passengers worldwide.

Airlines tweak their schedules constantly, sometimes months in advance, sometimes hours before departure. They adjust for seasonality, crew availability, airport slots, and weather disruptions. And those disruptions mean actual departure times frequently differ from the published schedule.

Now, imagine an (agentic) AI system building itineraries or running disruption-management analyses based on a publicly crawled schedule feed. The results might look “pretty accurate,” but in this industry, pretty accurate is not good enough. Even small mismatches can quickly spiral into disruption (and missed revenue).

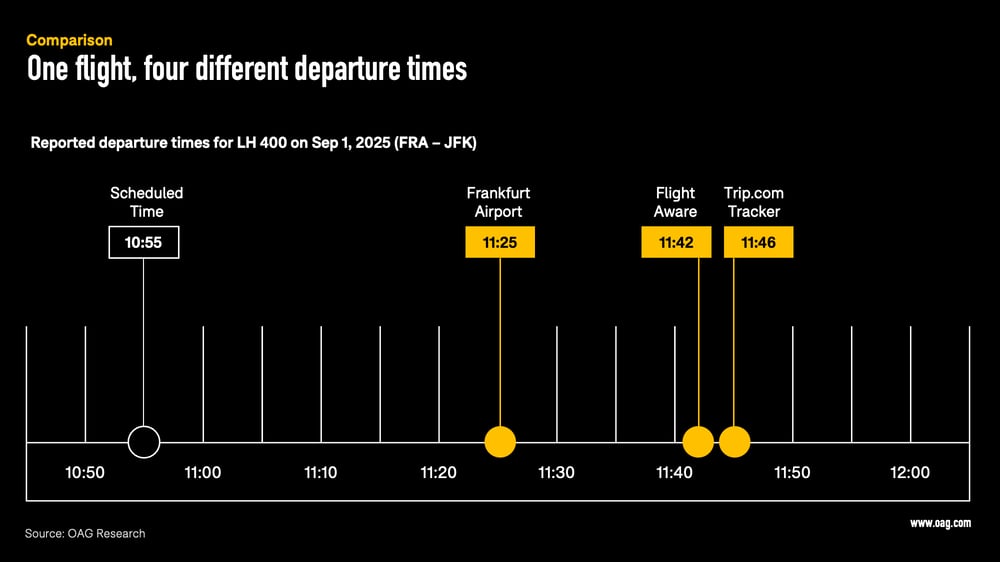

To illustrate:

Generic data pulls (from public web sources or generic AI queries) often provide conflicting data points. Consider Lufthansa Flight LH 400 on September 1, 2025, from Frankfurt (FRA) to New York (JFK).

- The scheduled departure was 10:55 AM.

- But how much later did it actually leave?

- That depends on which public source you trust: Frankfurt Airport listed 11:25 AM on its website, FlightAware had 11:42 AM, and Trip.com tracked 11:46 AM.

If an AI system had to work with these conflicting timestamps, it could:

- Pick the wrong one at random,

- Average them into a false “truth,” or

- Propagate all three into downstream systems.

In every case, the output is unreliable. And unreliability poisons prediction models and disrupts every process that depends on precise timing. In aviation, being off by 20 minutes is not a rounding error; it’s a missed connection, a delayed crew rotation, or a broken customer promise.
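A safer pattern is to detect the disagreement and flag it instead of guessing. The sketch below uses the LH 400 timestamps quoted above; the function names and the 5-minute tolerance are assumptions chosen for illustration, not an OAG method.

```python
from datetime import datetime

FMT = "%Y-%m-%d %H:%M"

# Scheduled and reported departure times for LH 400, as quoted in this article.
scheduled = datetime.strptime("2025-09-01 10:55", FMT)
reported = {
    "Frankfurt Airport": datetime.strptime("2025-09-01 11:25", FMT),
    "FlightAware": datetime.strptime("2025-09-01 11:42", FMT),
    "Trip.com": datetime.strptime("2025-09-01 11:46", FMT),
}

def delay_minutes(actual: datetime, planned: datetime) -> int:
    """Delay in whole minutes relative to the planned departure."""
    return int((actual - planned).total_seconds() // 60)

def reconcile(sources: dict, planned: datetime, tolerance_min: int = 5) -> dict:
    """Compare sources and flag a conflict instead of picking or averaging.
    tolerance_min is a hypothetical threshold, not an industry standard."""
    delays = {src: delay_minutes(t, planned) for src, t in sources.items()}
    spread = max(delays.values()) - min(delays.values())
    return {"delays": delays, "spread_min": spread, "conflict": spread > tolerance_min}

result = reconcile(reported, scheduled)
print(result)
```

Here the three sources report delays of 30, 47, and 51 minutes, a 21-minute spread, so the record is flagged as conflicting and can be routed to a trusted source rather than passed downstream.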

And the problems don’t stop at departure times. Public platforms from which the AI would pull its information also disagree on gates and terminals, and most ignore codeshare partners altogether, even though these are critical details for both operations and passenger experience.

Missed Connections: The Hidden Cost of Inaccurate MCT Data

If schedules are the foundation of aviation, then Minimum Connection Times (MCTs) are the rules that make itineraries viable. MCTs define the minimum time a passenger needs to change planes at an airport, accounting for terminal layouts, border control, and security checks.

The problem? Public data sources often misstate or oversimplify these times.

- At Frankfurt Airport, Lufthansa’s official minimum connection time is now 60 minutes. Yet public sources like Wikipedia, regularly scraped by ChatGPT and other AI tools, still list Frankfurt’s MCT at 45 minutes.

- For U.S.-bound connections, most airlines and travel experts recommend leaving 60 to 90 minutes, even though Frankfurt doesn’t publish this as a separate rule.

Now imagine an AI itinerary planner proposing a 45-minute connection at FRA for a U.S.-bound traveler. On paper, it looks doable; in practice, it’s an almost guaranteed misconnection.

Multiply that across thousands of passengers per day, and the costs escalate fast: rebookings, hotel vouchers, meal allowances, and reputational damage.

For airlines, what starts as a 15-minute data inaccuracy can snowball into millions in annual disruption costs.
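The Frankfurt example can be expressed as a simple validation check. This is a minimal sketch: the arrival time is hypothetical, the function name is an assumption, and real MCT rules vary by carrier, terminal, and route in ways this single number does not capture.

```python
from datetime import datetime, timedelta

def connection_is_valid(arrival: datetime, departure: datetime, mct_minutes: int) -> bool:
    """True when the time between arrival and onward departure meets the MCT."""
    return departure - arrival >= timedelta(minutes=mct_minutes)

# Hypothetical itinerary: a 45-minute connection at FRA.
arrival = datetime(2025, 9, 1, 9, 0)
departure = arrival + timedelta(minutes=45)

STALE_PUBLIC_MCT = 45   # value still listed by public sources, per this article
CURRENT_MCT = 60        # Lufthansa's current value at FRA, per this article

print(connection_is_valid(arrival, departure, STALE_PUBLIC_MCT))
print(connection_is_valid(arrival, departure, CURRENT_MCT))
```

The same itinerary passes against the stale 45-minute value and fails against the current 60-minute value, which is exactly how an outdated data point turns into a misconnection.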

Predicting Delays With Faulty Data = Broken Models


Another data set where precision is non-negotiable is On-Time Performance (OTP).

AI systems designed to forecast delays or optimize crew rotations depend on clean historical performance data.

Here, too, inaccuracies creep in. OAG processes over 2.5 million daily status updates from airlines and airports worldwide. But in public feeds, even a 5% misclassification rate (flagging flights as “on time” when they were delayed, or vice versa) is common.

That may sound minor, but for a large network carrier, it means thousands of flights each month are misrepresented. Feed that into an AI model, and you get skewed predictions: aircraft rotations planned on faulty assumptions, crews misallocated, maintenance windows misjudged.
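A back-of-the-envelope sketch shows the scale of the problem. The monthly flight count and the true on-time rate below are hypothetical inputs, and the formula assumes that mislabeling is equally likely in both directions, which real feeds may not satisfy.

```python
def misrepresented_flights(monthly_flights: int, misclass_rate: float) -> int:
    """Number of flights per month carrying the wrong on-time/delayed label."""
    return round(monthly_flights * misclass_rate)

def observed_otp(true_otp: float, misclass_rate: float) -> float:
    """On-time rate as it appears in the feed, assuming symmetric mislabeling:
    some on-time flights are flagged delayed, and some delayed ones on time."""
    return true_otp * (1 - misclass_rate) + (1 - true_otp) * misclass_rate

MONTHLY_FLIGHTS = 50_000   # hypothetical large network carrier
TRUE_OTP = 0.80            # hypothetical true on-time performance
MISCLASS_RATE = 0.05       # the 5% rate mentioned above

print(misrepresented_flights(MONTHLY_FLIGHTS, MISCLASS_RATE))
print(f"{observed_otp(TRUE_OTP, MISCLASS_RATE):.1%}")
```

With these made-up inputs, 2,500 flights a month carry the wrong label, and a true 80% on-time rate shows up as about 77% in the data a model would learn from.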

Conclusion: Your AI Strategy Is Only as Good as Your Data Strategy

Solving the aviation data quality crisis requires more than ad-hoc cleaning jobs. It demands structural change, cultural investment, and the right tools.

- The first step for airlines and travel brands is to anchor their systems in the most accurate, reliable data available. In aviation, that means OAG. Without a single source of truth, every downstream system, whether for disruption management, crew scheduling, or AI itinerary planning, is built on sand.

- Data quality cannot be “everyone’s problem.” When no one is accountable, inconsistencies slip through the cracks. Strong governance policies and clear accountability structures are essential to maintain integrity and consistency.

- AI models cannot be trained once and left to drift. Datasets must be continuously reviewed for bias, labeling errors, and outdated information. Data curation is not a one-off project; it’s an ongoing discipline.

In summary: bad data is the silent killer of AI.

Garbage in, garbage out, except now, it’s garbage at the speed of light. Trusted, curated, and accountable data is the only way to ensure AI delivers on its promise for aviation.

AI won't fix aviation's data problem. Trusted data will.
